How We Test VPNs

Transforming Data Into Objective & Comparable Reviews

In 2025, we published reviews for 20 different VPN services. These reviews came after months of research, test development, and collaboration between our engineers, testers, writers, photographers, and editors. Each review involves a lot of work behind the scenes, with checks and balances at every stage, so that the results we give you are as accurate and useful as possible. This article takes a step-by-step look at how we test VPNs, from product selection to testing to review publication and beyond.

Product Selection

Unlike with most products we review, we don't regularly subscribe to and test new VPNs. Instead, we subscribed to an initial batch of 20 of the most popular VPNs on the market and tested them all at once, because new VPNs aren't released as frequently as physical products like TVs or mice. That said, we can still review new VPN services if we see they're popular online or if users (you!) ask us to review them. Don't hesitate to email us at feedback@rtings.com or drop a comment in our forums if there's a particular VPN you'd like to see us review.

Philosophy

How We Buy Products

As with all our reviews, we subscribe to every VPN service we test using our own money, just like you would. We don't accept free subscriptions from brands. This way, we avoid custom software builds cherry-picked to excel on our test bench. While we may receive affiliate commissions from some VPN providers that we review, we aren't beholden to them, and they don't dictate how we score their services or whether we recommend them.

Standardized Tests

Every service we test undergoes the same testing process, so you can see how they perform on an even playing field. Testing products this way is the key to making our reviews useful for deciding which VPN to subscribe to. We also have tools like our side-by-side comparison and the table tool that you can use to view all the VPNs we've tested.

Testing

Our VPN reviews consist of three main categories: Security, Performance, and Features.

Security

Testing starts before we even subscribe to a VPN service. We evaluate the registration process to see what creating an account and paying for the service is like. This is a crucial part of the process because the VPN company could link the personal information that you provide, like your email address and password, to your IP address when you create your account. VPNs with the best privacy practices don't require you to sign up with an email address and password; instead, they assign you a randomly generated account number. We also note which payment methods the VPN accepts. Ideally, it accepts cryptocurrencies and even cash sent in the mail for better anonymity.

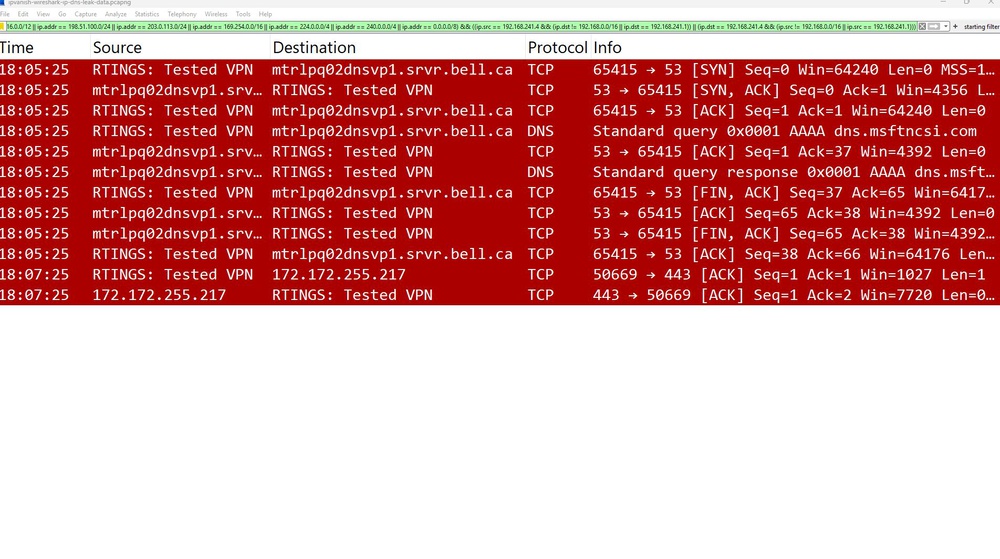

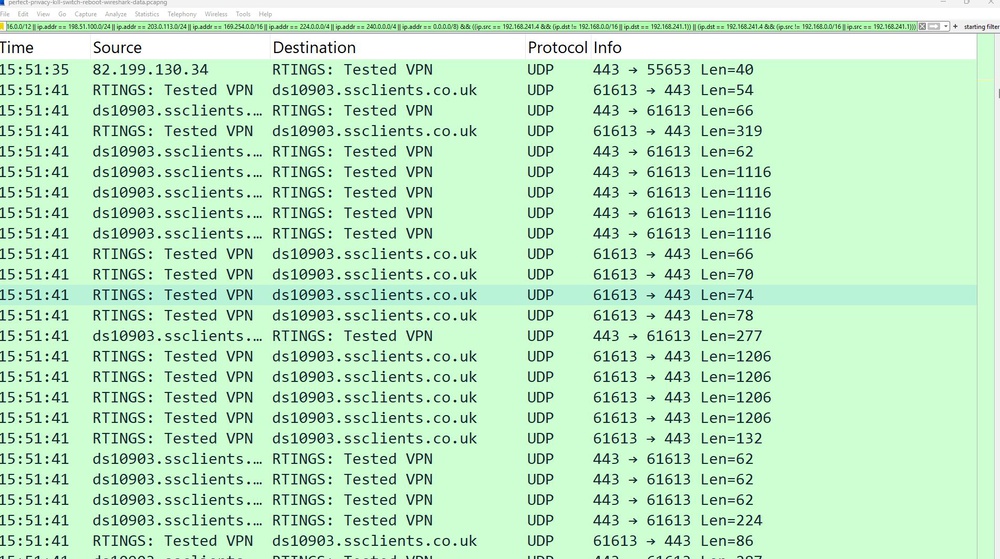

Next, we get to the meat of our security testing, where we see if a VPN really performs as advertised. One of the main reasons you might use a VPN is to mask your true IP address and DNS queries, which interested parties, like your ISP or potential bad actors, can use to identify you. While the VPN is running normally, we test for leaks using IPLeak.net's API and monitor system traffic from a second computer running Wireshark.
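To make the idea of a leak concrete, here is a minimal sketch of the comparison behind such a check. It is not the test bench's actual code. It assumes you already know your real public IP and your ISP's address ranges, and that the observed IP and DNS resolver addresses have been collected separately (for example, from a leak-test service). The names `check_leaks` and `LeakReport` are hypothetical, and the addresses in the usage example are reserved documentation addresses.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass
class LeakReport:
    ip_leak: bool   # the site saw your real public IP
    dns_leak: bool  # at least one DNS resolver belongs to your ISP


def check_leaks(real_ip, isp_networks, observed_ip, observed_dns_servers):
    """Compare what a remote service observed against your real identity.

    real_ip: your public IP without the VPN (assumed known in advance).
    isp_networks: CIDR ranges owned by your ISP (assumed known in advance).
    observed_ip: the public IP seen while the VPN is connected.
    observed_dns_servers: resolver IPs seen while the VPN is connected.
    """
    isp_nets = [ip_network(net) for net in isp_networks]
    ip_leak = ip_address(observed_ip) == ip_address(real_ip)
    dns_leak = any(
        ip_address(server) in net
        for server in observed_dns_servers
        for net in isp_nets
    )
    return LeakReport(ip_leak=ip_leak, dns_leak=dns_leak)


# Usage: the observed IP differs from the real one, but one resolver
# still sits inside the ISP's range, which counts as a DNS leak.
report = check_leaks(
    real_ip="203.0.113.7",
    isp_networks=["198.51.100.0/24"],
    observed_ip="192.0.2.44",
    observed_dns_servers=["192.0.2.53", "198.51.100.9"],
)
print(report)
```

A check like this only covers what a remote service can see, which is why watching the traffic itself from a second machine is a useful complement.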

We also test each VPN's kill-switch feature to see whether it prevents your device from connecting to the internet outside the VPN's encrypted tunnel when the software isn't running, such as when your computer boots up, when the software crashes, or when your computer reconnects to the internet. Interestingly, nearly every VPN in our initial batch failed this test. If you want to learn more, check out our R&D article.
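One way to think about a kill-switch failure is as any captured packet that leaves the device for a destination other than the VPN server or the local network. The sketch below illustrates that idea under stated assumptions, and is not the actual procedure used for these reviews. It assumes the capture has already been exported to `(timestamp, destination_ip)` tuples, and the function name `find_kill_switch_leaks` is hypothetical.

```python
from ipaddress import ip_address, ip_network


def find_kill_switch_leaks(packets, vpn_endpoints, local_networks):
    """Return packets sent outside the tunnel.

    packets: iterable of (timestamp, destination_ip) tuples, assumed to be
        exported from a capture taken during boot, a crash, or a reconnect.
    vpn_endpoints: IPs of the VPN servers (traffic to these is the tunnel).
    local_networks: CIDR ranges treated as harmless LAN traffic.
    """
    allowed_ips = {ip_address(ip) for ip in vpn_endpoints}
    local_nets = [ip_network(net) for net in local_networks]
    leaks = []
    for timestamp, destination in packets:
        addr = ip_address(destination)
        if addr in allowed_ips:
            continue  # encrypted tunnel traffic to the VPN server
        if any(addr in net for net in local_nets):
            continue  # local traffic, such as the router
        leaks.append((timestamp, destination))
    return leaks


# Usage: the third packet goes straight to a public address, so the
# kill switch did not hold during this capture.
captured = [
    (0.0, "192.0.2.10"),     # VPN server
    (1.5, "192.168.1.1"),    # local router
    (2.0, "198.51.100.20"),  # public host outside the tunnel
]
print(find_kill_switch_leaks(captured, ["192.0.2.10"], ["192.168.1.0/24"]))
```

An empty result for every scenario would mean nothing escaped the tunnel in the captured traffic.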

Performance

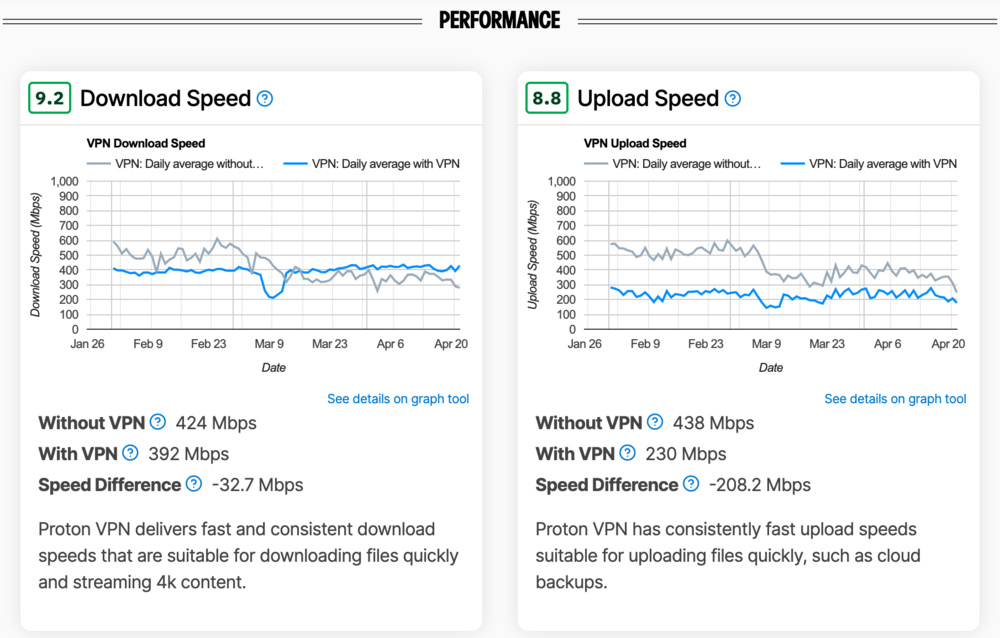

In this section, we evaluate each VPN's upload speed, download speed, and latency. To conduct these tests, we install each VPN on a VPS (virtual private server) instance running headless Linux; VPNs that don't support Linux or headless installations run on Windows with mouse and keyboard macros instead. We then run upload speed, download speed, and latency tests against speedtest.net servers in four locations: the East Coast of the US; the West Coast of the US; London, UK; and Sydney, Australia. We run these tests multiple times per day with each VPN and calculate its average performance over the past three months. This shows how consistently each VPN performs and can reveal patterns such as slow downloads or latency spikes.
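The three-month average can be pictured as a rolling window over timestamped measurements, grouped by server location. The sketch below is only an illustration of that arithmetic, not the pipeline used for these reviews. It assumes samples arrive as `(taken_at, location, value)` tuples and approximates "three months" as 90 days; the function name `rolling_average` and the sample values are hypothetical.

```python
from datetime import datetime, timedelta
from statistics import mean


def rolling_average(samples, now, window_days=90):
    """Average each location's measurements over the trailing window.

    samples: iterable of (taken_at, location, value) tuples, where value
        could be a download speed in Mbps or a latency in ms.
    now: end of the window.
    window_days: window length; 90 days is an assumed stand-in for
        "three months".
    """
    cutoff = now - timedelta(days=window_days)
    by_location = {}
    for taken_at, location, value in samples:
        if cutoff <= taken_at <= now:
            by_location.setdefault(location, []).append(value)
    return {location: mean(values) for location, values in by_location.items()}


# Usage with made-up numbers: the January sample is older than 90 days
# and is left out of the London average.
now = datetime(2025, 6, 1)
samples = [
    (datetime(2025, 1, 1), "London", 900.0),
    (datetime(2025, 5, 1), "London", 100.0),
    (datetime(2025, 5, 20), "London", 200.0),
    (datetime(2025, 5, 20), "Sydney", 50.0),
]
print(rolling_average(samples, now))
```

Because the window slides forward with each new run, a single bad day moves the average only slightly, while a sustained slowdown shows up clearly.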

Features

The final section is quite straightforward. We list several of the most important features that differentiate VPNs from each other. These include the platforms and protocols that are supported, how many devices you can connect simultaneously, how many countries have servers you can connect to, whether you can download torrent files, whether there's a download limit, whether you can configure the VPN headlessly, and whether it supports split tunneling.

Writing

Once testers complete their evaluation of a VPN, the first of two peer-review phases begins. In this initial review, writers and testers collaborate closely to validate the test results. Although this step isn't visible in the published review, it's essential for maintaining accuracy and quality. We cross-check our results against similar VPN services, our expectations, industry knowledge, and community feedback. If there's anything we're uncertain about, we conduct additional testing until we reach a consensus. Depending on the complexity, this process may range from a few minutes to several days. Once writers and testers agree on the results, we publish early access findings for our insiders and begin drafting the full review.

Each review starts with a clear introduction highlighting the service's standout features and key selling points. We then craft detailed sections tailored to specific use cases, clearly outlining how the VPN performs in various scenarios. Additional components include comparative boxes discussing feature variations, subscription tiers, and comparisons to similar VPN services available in the market.

While our test results are intended to be straightforward and self-explanatory for knowledgeable readers, we provide supplemental text to clarify complex results or unusual findings. This additional context may include comparisons to other services and technical explanations when necessary.

Once the first draft of the written review is finished, a second peer review stage occurs. Another writer reviews the content, providing feedback on clarity, readability, and accuracy. The tester who originally conducted the evaluations confirms all technical aspects, ensuring every statement accurately reflects the test results.

Finally, the review is sent to our editing team, who meticulously check formatting, consistency, and adherence to internal style guidelines. Their rigorous attention ensures the published review is polished, error-free, and meets the highest standards of quality our readers expect.

Recommendations

Our VPN recommendations are closely related to how we test and publish our individual reviews. These recommendations are curated lists designed to help people decide which VPN to subscribe to. Our writers revisit these lists continually and update them frequently. We don't just look at which services score the best; we also factor in pricing, user satisfaction, and whether the company markets its products honestly and realistically.

Remember that our recommendations are only that: recommendations. We don't intend for them to serve as definitive tier lists for every possible user. We want our recommendations to be the most helpful for a non-expert audience or folks who want to know where to start when researching which VPN to buy.

Retests

Unlike with other types of products we review, we can't resell VPN services, and we plan to stay subscribed to all our VPNs as long as they remain relevant. This is an advantage, since we always have access to each VPN we've tested in case we need to take another look at it. We might retest a VPN for several reasons, like responding to community requests about performance issues or when service providers release major software updates that add new features or improve performance.

The retest process looks like our full review testing, but operates on a smaller scale. Testers conduct the retests, after which writers collaborate with testers to confirm the results. Writers then update the review with the new results, providing detailed context around the new findings. Once our editing team thoroughly checks and approves the updated content, we publish the revised version of the review. To maintain full transparency, we leave a public message to identify which changes were made, why we made them, and which tests were impacted.

Our test results are updated regularly since our performance tests (speed and latency) are ongoing. If any trends or scores change significantly, we also update the text in the affected reviews so that you have an up-to-date picture of how consistently each VPN performs over time.

Videos and Further Reading

If you want to see what our review pipeline looks like, check out the video below. Note that it looks slightly different for VPNs since they're a software service rather than a physical product.

We also produce in-depth video reviews and recommendations using a similar pipeline of writers, editors, and videographers with multiple rounds of validation for accuracy at every turn. For mouse, keyboard, and monitor reviews in video form, see our dedicated RTINGS.com Computer YouTube channel.

For all other pages on specific tests, test bench version changelogs, or R&D articles, you can browse all our VPN articles.

How to Contact Us

Constant improvement is key to our continued success, and we rely on feedback to help us. We encourage you to send us your questions, criticisms, or suggestions anytime. You can reach us in the comments section of this article, anywhere on our forums, on Discord, or by emailing feedback@rtings.com.