How We Test Headphones

Transforming Data Into Objective Reviews

We've tested and reviewed over 825 pairs of headphones, so we know a thing or two about audio. Our goal is to provide trustworthy, objective, and detailed reviews to help you find the best pair of headphones for your individual needs. Here's a peek behind the curtain on how we test headphones in our lab. We don't cherry-pick the specific units we buy, and they're purchased from the same retailers as everyone else—no free test units from manufacturers here!

Our testing methodologies are developed by in-house experts and tailored both to capture real-world performance and to push each product to its limits. These tests are designed to be repeatable, and every product is subjected to the same suite of measurements, even when it seems a bit funny to test how well open-fit headphones block out external noise. The purpose is to collect data for robust comparisons. We're not in the business of telling you how to use your headphones, though we have some suggestions and ideas.

If you prefer to get your information a little more dynamically, we've created the video below following the review process of the Sonos Ace Wireless.

Philosophy

How we buy

The first step is selecting which headphones to purchase and test. We buy our headphones from the same retailers as everyone else, like Amazon, Best Buy, B&H, Walmart, and others, choosing products that are available in the United States. By purchasing our headphones from stores rather than accepting review units from manufacturers, we ensure that cherry-picked items don't influence our results and that we can maintain our independence.

Essentially, we take three approaches to headphone selection. Most of the time, we pick popular headphones that are either new models or longstanding favorites in the community. We gauge popularity with various web and traffic tools, alongside our team's knowledge of the products and the market. Otherwise, we'll intentionally acquire particular types of headphones for focused content in new or existing recommendation articles, like the best DJ headphones and the best wireless earbuds for running and working out. Finally, you can use our voting poll to help decide what we test next, and Insiders get extra votes.

The voting tool lets you directly influence which products we buy for reviews. Every 60 days, the poll is tallied, and the winner (with 25 or more votes) is purchased for testing and review. If you're part of our Insiders program, you get 10 votes; if you're not, you get one vote. These renew after each 60-day voting cycle.
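The voting rule above can be sketched in a few lines of Python. This is only an illustration of the stated numbers (a 25-vote minimum, 10 votes for Insiders, one vote otherwise). The function names and the ballot format are hypothetical, and because tie-breaking isn't described here, the sketch simply keeps whichever leading product it tallied first.

```python
from collections import Counter

MIN_VOTES_TO_WIN = 25  # minimum votes a winner needs, as stated in the text
INSIDER_VOTES = 10     # votes per 60-day cycle for Insiders
GUEST_VOTES = 1        # votes per 60-day cycle for everyone else


def votes_available(is_insider):
    """Return how many votes a reader can cast in one voting cycle."""
    return INSIDER_VOTES if is_insider else GUEST_VOTES


def pick_winner(ballots):
    """Tally (product, votes_cast) pairs and return the winning product.

    Returns None when no product reaches the minimum vote count.
    Tie handling is an assumption: the first product tallied wins a tie.
    """
    totals = Counter()
    for product, votes_cast in ballots:
        totals[product] += votes_cast
    if not totals:
        return None
    product, count = max(totals.items(), key=lambda item: item[1])
    return product if count >= MIN_VOTES_TO_WIN else None
```

For example, three ballots of 10, 10, and 5 votes for the same product reach the 25-vote minimum, while a poll whose leader has only 10 votes produces no winner for that cycle.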

Standardized tests

Once the headphones are ordered, delivered, and unpacked, we begin the process by photographing them in our lab under the same conditions, so you can see how they look out of the box.

Since our tests, reviews, and articles aim to gather and interpret objective data for comparisons across similar products, our testing methodologies are designed for consistency and repeatability in controlled settings. The advantage of our standardized testing is that it dispels marketing jargon and lets you easily compare products according to the features that matter most to you.

While not all tests are relevant for every product (open-fit earbuds, of course, will perform poorly in noise isolation tests), the point is to have a comprehensive set of metrics that lets you compare all types of headphones side by side, which you can do easily using our Compare tool.

In addition to our standard measurements, we'll also investigate and test unique features, and we try to monitor and address feedback from comments and the community on what you think is important to check and test out. We do our best to revisit existing reviews, either through retests or when we update our Test Bench methodology. Of course, not every consideration is solely objective, but that doesn't mean we can't find ways to address preferences objectively. Ardent fans of bass-heavy headphones, for example, show us that one target frequency response curve isn't perfect for everybody, which is why we introduced Sound Signatures with Test Bench 2.0 (which includes descriptors such as 'Boosted Bass'), so you can find headphones that suit your tastes.

For a quick reference across various usages, our table tool can help you narrow down your next pair of headphones, and you can customize the table's settings to cover features that are essential for you.

We have hundreds of tests belonging to six categories: Sound, Design, Isolation, Microphone, Active Features, and Connectivity.

| Sound | Design | Isolation | Microphone | Active Features | Connectivity |
|---|---|---|---|---|---|
| Sound Profile | Style | Noise Isolation - Full Range | Microphone Style | Battery | Wired Connection |
| Frequency Response Consistency | Comfort | Noise Isolation - Common Scenarios | Recording Quality | App Support | Bluetooth Connection |
| Raw Frequency Response | Controls | Noise Isolation - Voice Handling | Noise Handling | | Wireless Connection (Dongle) |
| Bass Profile: Target Compliance | Portability | ANC Wind Handling | | | PC Compatibility |
| Mid-Range Profile: Target Compliance | Case | Leakage | | | PlayStation Compatibility |
| Treble Profile: Target Compliance | Build Quality | | | | Xbox Compatibility |
| Peaks/Dips | Stability | | | | Base/Dock |
| Stereo Mismatch | In The Box | | | | |
| Group Delay | | | | | |
| Cumulative Spectral Decay | | | | | |
| PRTF | | | | | |
| Harmonic Distortion | | | | | |
| Electrical Aspects | | | | | |
| Virtual Soundstage | | | | | |
| Test Settings | | | | | |

Sound

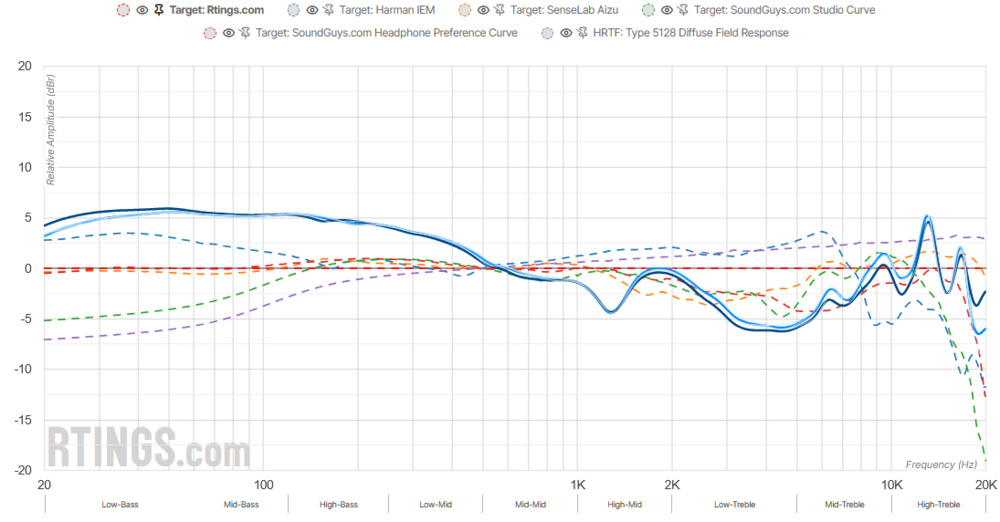

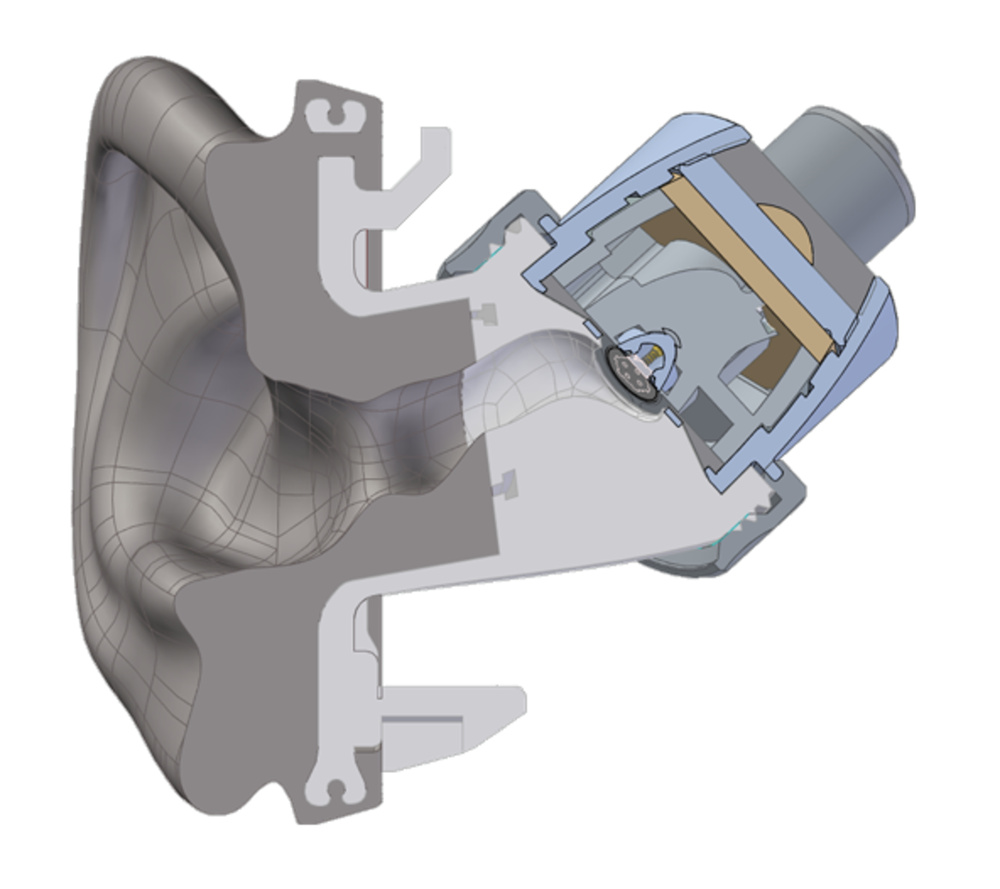

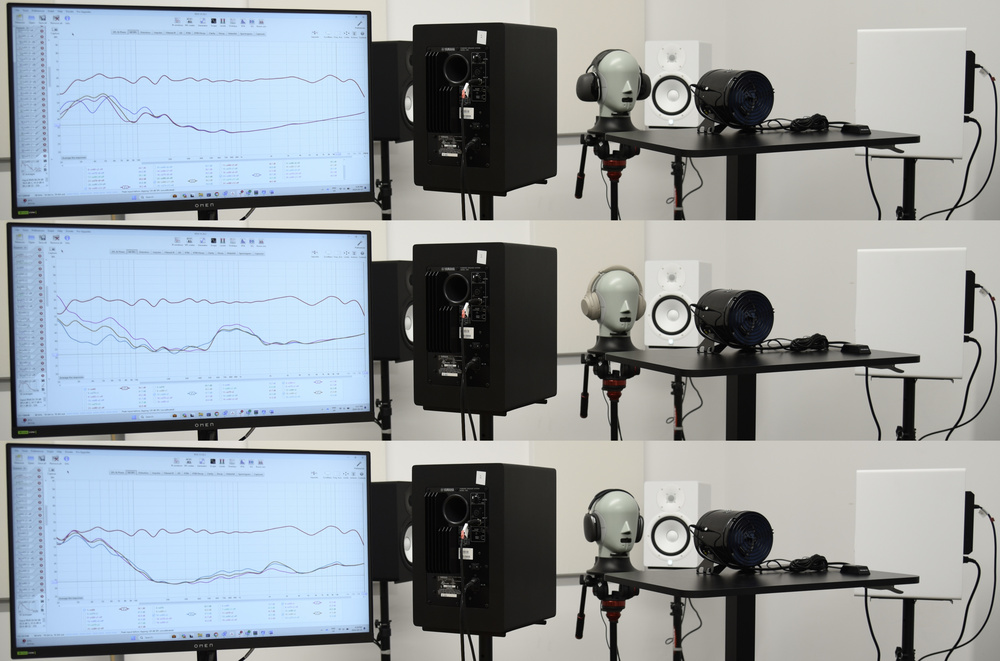

At the core of every pair of headphones is how they sound, and we use our Brüel & Kjær (B&K) Type 5128-B Head and Torso Simulator and Audio Precision APx517B Acoustic Analyzer testing rig for most of our tests in a room with minimal ambient noise. The headphones are calibrated to 94 dB SPL with a normalized test tone. Many of our sound tests use the same two-second 20 Hz to 20 kHz log sweep from the Audio Precision APx517B, which is fed into the headphones. The signal reproduced by the headphones is then recorded via the B&K ear simulator (type 4620) on the test head and sent to the Acoustic Analyzer for processing. These tests are performed with multiple passes.
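For readers curious about what a log sweep is, here's a minimal Python sketch of a two-second 20 Hz to 20 kHz exponential sine sweep. It isn't the signal the analyzer generates internally: the 48 kHz sample rate, the unit amplitude, and the function names are assumptions made for illustration. The formula is the standard exponential sweep, in which the instantaneous frequency rises from the start to the end frequency by the same ratio in each equal slice of time.

```python
import math


def log_sweep(f_start=20.0, f_end=20_000.0, duration_s=2.0, sample_rate=48_000):
    """Return the samples of an exponential (logarithmic) sine sweep in [-1, 1].

    The 48 kHz sample rate is an illustrative assumption.
    """
    n_samples = int(duration_s * sample_rate)
    log_ratio = math.log(f_end / f_start)  # natural log of the frequency ratio
    scale = 2.0 * math.pi * f_start * duration_s / log_ratio
    # i / n_samples is the elapsed fraction of the sweep (t / duration).
    return [
        math.sin(scale * (math.exp(log_ratio * i / n_samples) - 1.0))
        for i in range(n_samples)
    ]


def instantaneous_frequency(t, f_start=20.0, f_end=20_000.0, duration_s=2.0):
    """Return the frequency (Hz) the sweep is playing at time t (seconds)."""
    return f_start * (f_end / f_start) ** (t / duration_s)
```

Because the sweep is logarithmic, it spends as long between 20 Hz and 200 Hz as it does between 2 kHz and 20 kHz, so each decade of the audible range gets equal time.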

About preference-based and performance-based tests

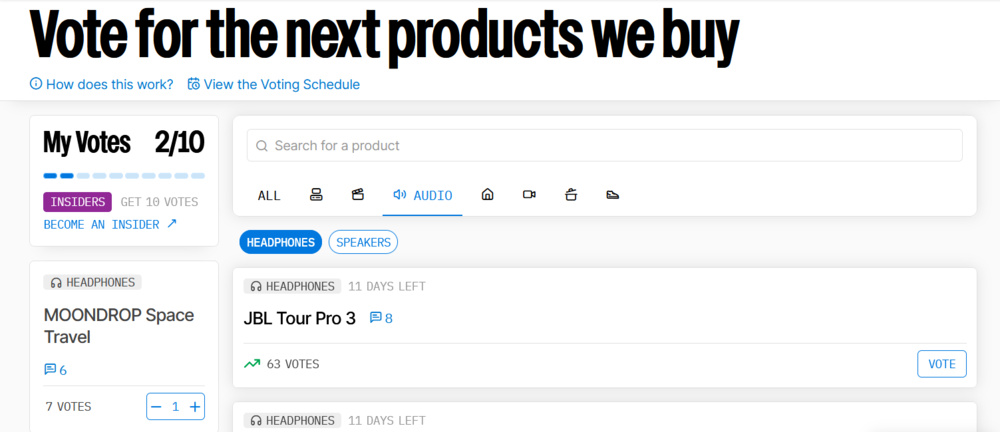

There's an old adage that goes, 'there's no accounting for taste,' but we disagree. Considerations like taste are subjective, but we can mostly account for them, especially when it comes to tests like Raw Frequency Response. Certainly, there's a lot of existing research on what makes the perfect-sounding frequency response curve, and we've even created our own target curve. However, in truth, there's no single ideal frequency response that's suitable for every person.

Our solution for addressing sound preference is to offer you the flexibility to choose your preferred frame of reference by adding more frequency response targets that are respected and researched. As the headphones' measured frequency response is obtained through controlled and objective means, this isn't simply a case of pure subjectivity. Extra target curves allow for variation and preferences between individuals. As of Test Bench 2.0, you can use the graph tool (or click through graphs in the review) to swap out our target curve for another.

Tests like Sound Profile make it even easier for you to understand a frequency response in relation to our target (or another), and provide additional tools like Sound Signatures to interpret the data and compare the general sound of different pairs of headphones. Similarly, our Target Compliance sections for bass, mid-range, and treble default to our target curve so you can better see all fluctuations in the response, but if you click through the graphs in these boxes, you can add any of the other curves for comparison as well.
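To make the idea of comparing a measurement against a swappable target more concrete, here's a rough Python sketch. It isn't our scoring formula: the band edges, the level-matching step, and the use of RMS deviation are all assumptions chosen for illustration, and the function name is hypothetical.

```python
import math

# Band edges in Hz are illustrative assumptions, not official definitions.
BANDS = {
    "bass": (20, 250),
    "mid": (250, 2_000),
    "treble": (2_000, math.inf),
}


def band_deviation(freqs_hz, measured_db, target_db):
    """Return the RMS deviation (dB) from a target curve for each band.

    The average level difference is removed first, so a response that only
    differs from the target by overall loudness counts as a perfect match.
    Bands with no measurement points are reported as None.
    """
    diffs = [m - t for m, t in zip(measured_db, target_db)]
    offset = sum(diffs) / len(diffs)  # overall level difference
    result = {}
    for name, (low, high) in BANDS.items():
        errors = [d - offset for f, d in zip(freqs_hz, diffs) if low <= f < high]
        if errors:
            result[name] = math.sqrt(sum(e * e for e in errors) / len(errors))
        else:
            result[name] = None
    return result
```

Swapping targets then only means passing a different `target_db` list for the same measured response, which is why the measurement itself stays objective while the frame of reference can follow your preference.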

In contrast, the majority of our measurements are based on objective performance, like Peaks/Dips and Harmonic Distortion. For these, there are clearly good results that most users will be happy with, and bad results that negatively impact everyone's listening experience. While what's objectively 'bad' or 'good' performance and how we obtain that data can change with innovative research developments, these measurements are otherwise clear-cut. Other tests like Stereo Mismatch, Group Delay, Cumulative Spectral Decay, PRTF, Electrical Aspects, and Virtual Soundstage use objective tests and the rig to address various important features, from how well the left and right drivers match each other to the software-based 3D audio available on select headphones.

It's worth highlighting one of our most unique tests, Frequency Response Consistency. These results show how closely the sound of the headphones on different people, with their natural variations, matches the Raw Frequency Response measured with the B&K rig.

Before the headphones are seated, tiny lab microphones are placed in the ears of people with various characteristics, such as long hair, glasses, and small, average, or large heads. The same two-second 20 Hz to 20 kHz log sweep from the Audio Precision APx517B plays through the headphones, and the mics capture the sound as each person wears them. The recorded audio is sent back to the Acoustic Analyzer for processing. This test is performed multiple times.
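As a simplified illustration of how such a comparison could be summarized, the Python sketch below takes one response per listener, all sampled at the same frequencies, and reports the spread between listeners and each listener's average distance from the rig measurement. The metrics and function names are assumptions for illustration, not the calculation behind our scores.

```python
from statistics import pstdev


def seating_spread(responses_db):
    """Return the per-frequency standard deviation (dB) across listeners.

    responses_db holds one list of dB values per listener, all measured
    at the same frequency points.
    """
    return [pstdev(levels) for levels in zip(*responses_db)]


def deviation_from_rig(responses_db, rig_db):
    """Return each listener's mean absolute deviation (dB) from the rig."""
    return [
        sum(abs(level - rig) for level, rig in zip(response, rig_db)) / len(rig_db)
        for response in responses_db
    ]
```

A small spread and small deviations would suggest that what the rig measures is close to what most people hear, while large values at low frequencies would point to a seal that breaks with glasses or long hair.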

While the B&K ear simulator (type 4620) is designed to mimic human ear anatomy, we also account for natural differences between people by using in-ear lab mics to compare the results for consistency.

Design

While sound is important, it doesn't matter if your headphones are uncomfortable or can't withstand the rigors of everyday use. Our Design tests go through the user experience with headphones as a material object: how they look, how tightly or loosely they fit, whether you can easily take them on the go, if they'll stay put, and if the controls are intuitive and work well. We check for features like material quality, dust and water resistance, accessories such as ear tips, and sturdy hinges, and we gauge whether you can go to the gym and expect them to stay on, to name just a few tests.

Isolation

We've developed several ways to measure the noise isolation performance of headphones. These tests use the B&K test head to demonstrate the passive noise isolation of the headphones and, if there's active noise cancellation, its efficacy when blocking full-spectrum noise, sampled noise found in common noisy environments, and wind noise. These tests all come with accompanying recorded audio clips so you can get a sense of what the scores and graphs mean audibly. This overview merely scratches the surface.
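In general terms, isolation can be expressed as the drop in level at the ear once the headphones are on. The Python sketch below shows that arithmetic under simplifying assumptions: levels are given in dB for a handful of frequency bands, and the function names are hypothetical rather than part of our tooling.

```python
def attenuation_db(open_ear_db, worn_db):
    """Return the per-band attenuation in dB.

    Each value is the level measured at the ear without headphones
    minus the level measured with the headphones on.
    """
    return [open_level - worn_level for open_level, worn_level in zip(open_ear_db, worn_db)]


def mean_attenuation_db(open_ear_db, worn_db):
    """Return the plain average of the per-band attenuation values."""
    reduction = attenuation_db(open_ear_db, worn_db)
    return sum(reduction) / len(reduction)
```

Running the same comparison once with noise cancellation off and once with it on, then subtracting the two results band by band, gives a rough picture of how much the ANC adds on top of passive isolation.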

Microphone

For headphones with microphones, we use the same voice sample to record a demonstration of the mic's sound. The sample plays back from a speaker located where a person's mouth is, and the headphones' microphone captures the audio. The audio is available in the review, so you can assess its quality yourself. For instance, here's how the Audeze Maxwell Wireless boom mic system sounds without the introduction of noise. In Recording Quality, a frequency response graph provides a visual guide for how accurately the microphone captures sound.

For Noise Handling, we run the same voice sample for two tests with different types of noise recordings playing from speakers in the room to test how well the microphone system separates and prioritizes voices above background noise. Here's the same Audeze Maxwell Wireless demo with noise. We follow the same process with a standardized noise soundtrack to record how effectively the mic rejects real-world sounds like other people talking nearby and subway trains, as with the Audeze Maxwell Wireless again. The graphs and accompanying demos give you a sense of how well a given pair of headphones performs if you take a lot of calls or want a gaming headset with a dedicated microphone.

Active Features

This section applies only to headphones that require active power from batteries. When it comes to assessing the Battery itself, we check the continuous battery life by calibrating the headphones to output at the same loudness and then record the process with the same audio loop on repeat until the headphones are drained. In addition, this section notes other features such as power-saving modes and how long a dead battery takes to fully recharge.

App Support addresses all of the functions and compatibility housed in the headphones' app (if there is one). Usually, we'll experiment with extra features like graphic equalizers, adjustable active noise cancellation modes, and controls remapping, to give some important examples of what this section encompasses. It's one thing to list the features an app has, but we'll also frequently include a video capture of the app to show its interface and features at the time of the initial review testing, to better demonstrate the user experience.

Connectivity

Connectivity breaks down the various ways different types of headphones can connect to devices by using separate boxes for each method of connection: Wired, Bluetooth, and Wireless (Dongle). We don't simply list specifications. We actually test features like OS-specific Bluetooth codecs and perform multiple passes for metrics like latency. Specifications are just a baseline for our expectations of what the manufacturer claims about the headphones, and it's our job to see how those claims hold up under the scrutiny of hands-on testing.
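Performing multiple passes matters because a single latency reading can be thrown off by a momentary hiccup in the wireless link. As a small illustration, the Python sketch below condenses several passes into summary figures. The choice of statistics and the function name are assumptions for illustration, not a description of our internal tooling.

```python
from statistics import mean, median, pstdev


def summarize_latency(passes_ms):
    """Summarize repeated latency measurements given in milliseconds."""
    return {
        "passes": len(passes_ms),
        "mean_ms": mean(passes_ms),
        "median_ms": median(passes_ms),  # less sensitive to a single outlier
        "spread_ms": pstdev(passes_ms),  # how much the passes disagree
    }
```

A large spread relative to the mean is a hint that the connection itself is inconsistent, which is worth knowing in addition to the headline latency figure.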

Some data in this section can be sparse, as with passive headphones that use an analog 1/4" headphone jack and exhibit nearly zero latency. However, there's a lot to dig into with headphones that support multiple methods of connection, like gaming headsets with Bluetooth and wireless 2.4GHz connections, where low latency and Bluetooth codec support are important when making a buying decision. Features such as Bluetooth multi-point and connection range are just a couple of the key points we test by systematically working through the limitations and conditions under which a connection operates. Concretely, if a pair of earbuds is advertised with Bluetooth multi-point pairing, we'll test it to see if that's true and whether there are exceptions, like whether it still works when the earbuds are connected via the LDAC codec or the SBC codec.

Sometimes connectivity is as easy as a high-quality cable, as with the Audio-Technica ATH-ADX3000.

Other times, connectivity and device compatibility are much more complicated, as with gaming headsets (Astro A50 X's dock pictured above).

In addition, this section also acts as a quick reference for compatibility with PCs, PlayStation, and Xbox. We ensure this information is accurate by using the headphones with our PCs and gaming consoles to check the functionality, rather than trusting that it works because the box says so. Since many headphones, particularly gaming headsets, come with a dock needed for connecting to a PC or gaming console, Base/Dock is a reference for the features and limitations of a given headset's dock.

Review Testing, Writing, and Editing Process

Behind each review is a team of real people; we don't use AI. Once this rigorous series of tests is completed, the results are peer-reviewed by a second tester. This process ensures that the results make sense with the product specifications, community expectations, and real-world usage. It's an opportunity to ask questions. After this step, the review's writer goes through the measurements for an additional validation step. It's only after these checks that we release the initial measurements to Early Access; it's one of the perks of being an Insider!

Both testers and writers understand what a pair of headphones is used for, and we can continue to use the products we test. Just as our testers are trained and experienced with headphones and the specialized gear used for our headphone measurements, our writers have complementary experience and knowledge. Their job is to translate and bridge the gap between the data and our readers; they lend context and meaning to our results. They also highlight important features that might otherwise be buried in the data and, when needed, make connections between test results that interact with each other.

Once the writer completes their initial draft, the review is validated yet again by another writer as well as the tester who took the measurements. This step ensures our content is understandable, meaningful, and aligned with the data. Next, the completed review is handed over to our editing team, who check that the writing quality is consistent with our site's internal style guide, is free of mistakes, and displays correctly on the site. These steps make certain that each review is well-rounded, representative of the product, complies with our standards, and is comparable to other headphone reviews on the site.

Recommendations

Once the writers have the review results, they go through our recommendation articles to see if the reviewed pair of headphones earns a spot on any list. Unlike our deep dive reviews, a recommendation article serves as a roundup of the best products in a category, and we update these frequently to reflect market changes.

While scores play a part, they're not the only factor when choosing products. Availability, value, price, compatibility, specialized or niche features, and overall quality of experience with the product are just a few of the variables we consider. Plenty of great headphones don't make these lists, and while we go to great lengths to ensure the products we choose to highlight are the best for the specific recommendation article, these are meant as guides to help you make a decision. Basically, you still know what you like best, and therefore, which features are most important to you.

It's important to mention that if you choose to click through a link from one of our affiliate partners to purchase a pair of headphones, we earn a small commission from the retailer (at no additional cost to you), which helps us fund future reviews and articles. However, our affiliate partners don't influence our picks; we retain editorial independence. As an extra safeguard, the department that handles relations with affiliate retailers doesn't influence our reviews or recommendation articles. Our goal is to help you, and in a world full of sponsored content and career creators coasting on freebies with clickbait content, we still believe in the value of consistency, transparency, and earning your trust through hard work and objective measurements. Plus, if you choose to join our Insiders program, you can ask us for tailored buying advice.

Retests and updates

One upside of buying our own pairs of headphones is that we can revisit results at a later date with updated testing methodologies or perform retests when major firmware updates occur. We're also receptive to comments and suggestions if there's a special feature or use case that the original review didn't cover. If you leave a comment at the bottom of a review, you'll get a reply from someone on the team, and we'll frequently follow up with a retest, which can make its way into an update to the review.

The retest process is similar to the initial review process. Once a retest is flagged, the tester performs the retest and validates the results with a second tester. Then, a headphones writer makes appropriate changes to the review before the editing team ensures the changes make sense and comply with our guidelines.

Buying our own headphones to test gives us the flexibility to handle updates to products and address new developments within the wider audio community. Our reviews aren't frozen in time at the date of their initial publication, as is the case for other publications that only borrow headphones for a short term. That said, while we keep headphones in our inventory for quite a while, often years, we'll sometimes choose to resell products once our teams of writers, testers, and test developers determine that, for a variety of reasons (such as availability), a pair isn't going to yield additional value to our readers.

Conclusion

Thanks for sticking around from start to finish in this overview of our headphones test and review process. For more detailed breakdowns of each metric and the test bench methodologies, please check out our dedicated headphones articles. You can always click the 'Learn About [Test]' link at the bottom of each test box in our headphones reviews to get into the details of the measurements, too.

If you have any questions, feedback, or suggestions, contact us in the comments sections, the forums, on Discord, or email feedback@rtings.com.